Prompt Engineering with AI: The Art of Asking for What You Want

In the world of sales, one principle has stood the test of time: the importance of asking for what you want. Whether it’s nudging a prospect towards a deal or clarifying a client’s needs, the clarity of one’s ask can make or break the outcome. Interestingly, the same logic applies in the realm of AI, particularly in the emerging discipline of Prompt Engineering.

What is Prompt Engineering?

At its core, prompt engineering is the science and art of designing effective prompts to guide AI models, specifically language models, to produce desired outputs. It’s akin to finding the right way to ask a question to obtain the most useful answer. Given the vastness of knowledge and patterns an AI model (like Anthropic’s Claude v2) can recognize, how you ask something significantly influences the information you retrieve.

Consider this: you have a knowledgeable friend named Eric (a complete sports nut!) who can answer almost any general question you have about most sports. Ask Eric just about anything related to rules, play types, or game strategy and he’ll likely have an answer for you. But if you ask a vague or roundabout question such as “Hey, what’s that one game where they do the thing with the ball and the goal?”, Eric might just give you a puzzled look and ask if you are feeling okay. Similarly, with AI, the clearer and more directive your prompt, the more likely you are to receive a relevant and accurate response.

A few key aspects of prompt engineering:

- Precision: Crafting prompts that are clear and unambiguous. This ensures that the AI understands exactly what’s expected.

- Context: Providing enough context so the AI can make informed and relevant decisions in its responses.

- Iteration: Like any engineering task, prompt crafting is iterative. It often involves tweaking questions, testing results, and refining based on feedback.

- Tailoring for the Application: Depending on the use-case, whether it’s content generation, data extraction, or any other task, prompts will vary. Understanding the end goal is crucial to designing the right prompts.

In the vast universe of AI, where models can understand and generate human-like text, prompt engineering emerges as the compass, guiding these models to produce the outputs we seek.

Why is Prompt Engineering Crucial?

Just as a skilled salesperson tailors their pitch to their audience, prompt engineering focuses on tailoring questions to elicit accurate, relevant, and comprehensive answers from AI models. By refining the way we ask questions, we can harness the true potential of AI systems, allowing them to retrieve more pertinent information, interpret data more accurately, and deliver insights that resonate with end users.

The Right Question Produces Better Results

In Sales: Imagine you’re trying to close a deal. Instead of asking, “Do you have any budget constraints?”, which might lead to a vague response, you might ask, “What’s your budget range for this project?” This more specific question is more likely to yield a direct answer, helping you tailor your offering accordingly.

In AI: When working with a text summarization AI, rather than prompting it with “Summarize this article,” you could ask, “Provide a 3-sentence summary highlighting the main arguments of this article.” The latter will most likely return a concise and focused response, zeroing in on the most vital points.
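To make the contrast concrete, here is a minimal Python sketch of the two prompts. The helper name `build_summary_prompt` and its parameters are hypothetical, introduced only for illustration; the resulting string would be sent to whichever model you use.

```python
def build_summary_prompt(article: str, sentences: int = 3,
                         focus: str = "the main arguments") -> str:
    """Build a specific summarization prompt (hypothetical helper)."""
    if sentences < 1:
        raise ValueError("sentences must be at least 1")
    # State the length and the focus explicitly, then attach the article.
    return (
        f"Provide a {sentences}-sentence summary highlighting {focus} "
        f"of this article.\n\nArticle:\n{article}"
    )


article_text = "(article text goes here)"

# The vague version leaves length and focus up to the model.
vague_prompt = f"Summarize this article.\n\nArticle:\n{article_text}"

# The specific version pins down both.
specific_prompt = build_summary_prompt(article_text)
print(specific_prompt)
```

Keeping the length and focus as parameters also makes it easy to iterate: change one value, rerun, and compare the responses.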

Retrieval Augmented Generation (RAG): The Game Changer

Going back to our example of Eric being a walking sports encyclopedia, let’s suppose you want to know something about very recent events. For example, you could ask Eric, “How many total passing yards did Jared Goff have in the game this past Sunday?” or “How many sacks has Aidan Hutchinson recorded across both his college and professional careers to date?” Even with Eric’s expansive sports knowledge, it’s impractical to expect him to know every single up-to-date detail across all players and sports. That said, Eric knows how to search online for the information needed to answer these questions, and he can use what he finds to give accurate and relevant answers.

This is exactly what Retrieval Augmented Generation (RAG) does in the realm of AI. Much like how Eric uses online resources to fetch the most recent or detailed data, RAG employs a two-step approach: it first retrieves pertinent information from vast data sources, and then, leveraging that data, it crafts a coherent and contextually accurate response. In essence, RAG combines the best of both worlds - the efficiency of information retrieval and the sophistication of generative models.

The brilliance of RAG lies in its adaptability and breadth. Just as Eric wouldn’t limit his searches to a single website, RAG scans through extensive data sets to find the best matches for a given query. And once the relevant data is retrieved, the generative component ensures that the response isn’t just a data dump, but a well-structured, human-like answer.
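The retrieval step can be sketched in a few lines of Python. This is a toy illustration, not how any particular RAG system works: it ranks documents by how many words they share with the query, whereas production systems typically use vector embeddings. The function names and the sample documents are assumptions made for this example.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents sharing the most words with the query."""
    query_terms = tokenize(query)
    scored = [(len(query_terms & tokenize(doc)), doc) for doc in documents]
    # sorted() is stable, so documents with equal scores keep their order.
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    # Drop documents that share no words with the query at all.
    return [doc for score, doc in ranked[:top_k] if score > 0]


# Illustrative documents only.
documents = [
    "Basketball rules: a field goal is worth two or three points.",
    "Football rules: a touchdown is worth six points.",
    "Baseball rules: three strikes make an out.",
]

best = retrieve("How many points is a touchdown worth in football?",
                documents, top_k=1)
print(best)
```

The passages returned by a function like this are what the generative model receives alongside the question.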

In an ever-evolving world where data is constantly updated, the agility and proficiency of RAG are paramount. It ensures that AI models are not limited to the data they were last trained on but can access and leverage the most recent information available. This dynamic approach to information retrieval and response generation is what sets RAG apart and cements its status as a true game-changer in AI applications.

How RAG Works with Prompt Engineering

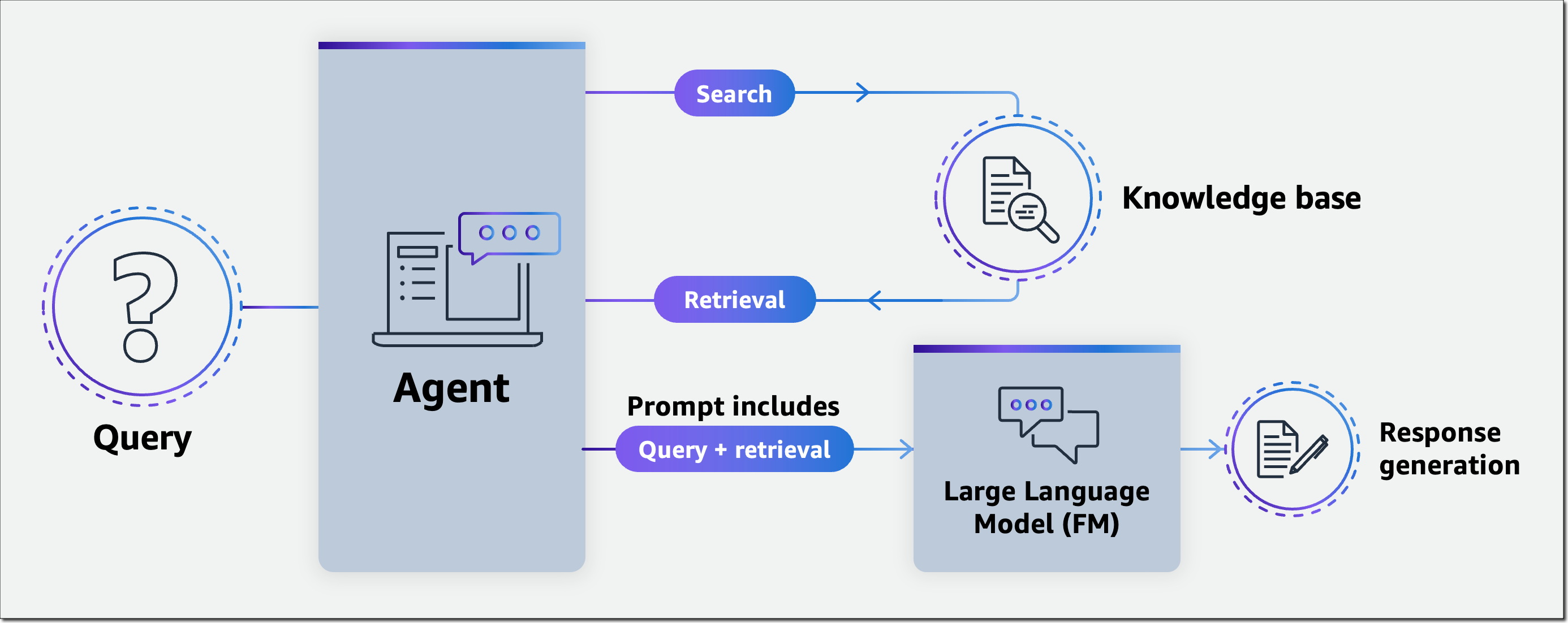

The synergy between Retrieval Augmented Generation (RAG) and prompt engineering is analogous to the relationship between a sports statistician’s data collection and a coach’s game strategy. While RAG functions like the statistician, gathering fresh statistics and player insights, prompt engineering acts as the coach, using that data to make informed decisions on how to best approach the game. Together, they ensure that AI offers not just an answer, but the most informed and precise one.

Let’s break down the interplay:

- Query Initiation: A question or prompt is posed to the AI system, signaling the start of the retrieval process. Example: “What Detroit Lions Tight End recorded a multiple touchdown game this past Sunday?”

- RAG Retrieval: The RAG system dives into its vast datasets (akin to our sports aficionado Eric scanning the internet) to fetch the most recent statistics or relevant data concerning the question.

- Prompt Refinement: Armed with this fresh information, the system augments the original prompt with the retrieved data before sending it to the large language model (LLM). This is where prompt engineering comes in: the retrieved facts are placed directly in the prompt so the model can answer from them rather than from memory alone. Supplying information in the prompt this way is often described as in-context learning. Example:

- Original Prompt: “What Detroit Lions Tight End recorded a multiple touchdown game this past Sunday?”

- Refined Prompt After Retrieval: “What Detroit Lions Tight End recorded a multiple touchdown game this past Sunday? Brock Wright had 3 receptions, for 16 yards and no touchdowns. Sam LaPorta had 3 receptions, for 47 yards and 2 touchdowns.”

- Response Generation: With this refined prompt, the AI model crafts a coherent, specific, and up-to-date response. Example: Sam LaPorta of the Detroit Lions scored multiple touchdowns against the Carolina Panthers in the NFL game this past Sunday.
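The four steps above can be sketched in Python as follows. This is a minimal sketch under stated assumptions: `augment_prompt` and `answer` are hypothetical names, and the retrieval and model calls are passed in as plain functions so the example stays self-contained. In a real system they would wrap a search index and an LLM API.

```python
from typing import Callable


def augment_prompt(question: str, retrieved_facts: list[str]) -> str:
    """Prompt refinement: append the retrieved facts to the question."""
    if not retrieved_facts:
        return question
    return " ".join([question, *retrieved_facts])


def answer(question: str,
           retrieve: Callable[[str], list[str]],
           generate: Callable[[str], str]) -> str:
    """Run retrieval, prompt refinement, and response generation in order."""
    facts = retrieve(question)                  # RAG retrieval
    refined = augment_prompt(question, facts)   # prompt refinement
    return generate(refined)                    # response generation


# Query initiation, using the example from the steps above.
original_prompt = ("What Detroit Lions Tight End recorded a multiple "
                   "touchdown game this past Sunday?")
retrieved_facts = [
    "Brock Wright had 3 receptions, for 16 yards and no touchdowns.",
    "Sam LaPorta had 3 receptions, for 47 yards and 2 touchdowns.",
]

refined_prompt = augment_prompt(original_prompt, retrieved_facts)
print(refined_prompt)
```

Printing `refined_prompt` reproduces the refined prompt shown in the example. Passing `retrieve` and `generate` as arguments is a design choice for this sketch: it keeps the prompt logic testable without a live model or data source.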

The transformation of the prompt, post-RAG retrieval, showcases how vital prompt engineering is in directing the AI towards more accurate and relevant responses. By incorporating the latest information, the AI doesn’t just regurgitate stored data; it actively interacts with it, using prompt engineering techniques to ensure the most contextual and accurate answers.

How AWS Simplifies Prompt Engineering With RAG

Amazon Web Services (AWS) has always been at the forefront of simplifying complex processes for developers and engineers. With the introduction of Amazon Bedrock, AWS has taken a significant leap in making the Retrieval Augmented Generation (RAG) process more accessible and efficient for prompt engineering.

Seamless Integration with Knowledge Bases

One of the primary challenges with RAG is the need to connect with vast and diverse knowledge bases to retrieve relevant information. AWS addresses this by providing seamless integration capabilities with Amazon Bedrock. Engineers can effortlessly connect foundation models to their company’s data sources, ensuring that the AI has access to the most recent and pertinent data.

Agents for Amazon Bedrock

AWS introduced Agents for Amazon Bedrock, a feature that acts as an intermediary between the AI models and the data sources. These agents facilitate the retrieval process, ensuring that the AI gets the most relevant snippets of information from the connected databases. This not only speeds up the retrieval process but also ensures that the data fetched is of high relevance to the posed query.

Simplified Prompt Refinement

Agents supply the retrieved data needed to refine prompts for in-context learning. This means that engineers don’t have to manually sift through the retrieved data to craft the perfect prompt. Agents can implement prompt refinements, making the process of prompt engineering more intuitive and less time-consuming.

Focus on Prompt Engineering, Not Infrastructure

One of the significant advantages of using AWS for RAG-based prompt engineering is the shift of focus. Engineers can concentrate on crafting the best prompts without worrying about the underlying infrastructure. AWS handles the heavy lifting of data retrieval, storage, and processing, allowing engineers to focus solely on the art of asking the right questions.

Scalability and Performance

As with all AWS services, scalability and performance are at the core of the offering. Whether you’re dealing with small datasets or vast knowledge bases, AWS aims to keep the RAG process smooth and efficient. As your data grows or your queries become more complex, the underlying infrastructure is designed to scale with them.

Parting Thoughts

In the realm of sales, the power of a well-phrased question or a precisely tailored pitch can be the difference between sealing a deal and losing a potential client. The essence of this principle is the art of asking for what you want, and doing so with clarity and precision. As we’ve explored, this very principle finds its parallel in the world of AI, especially in the discipline of prompt engineering.

Just as a salesperson meticulously crafts their questions to understand a client’s needs, prompt engineering refines the way we communicate with AI models, ensuring we extract the most valuable insights. The introduction of technologies like Retrieval Augmented Generation (RAG) and the advancements brought by AWS further amplify the importance of asking the right questions. They bridge the gap between static knowledge and dynamic retrieval, much like a seasoned salesperson who knows when to pull from their experience and when to seek out new information to address a client’s unique needs.

In both sales and AI, the quality of the outcome is often a direct reflection of the quality of the ask. As we continue to push the boundaries of what AI can achieve, it’s evident that the art of asking, refined over centuries in human interactions, will remain a cornerstone in our journey. Whether you’re closing a deal or querying an advanced AI model, remember: it’s not just about what you ask, but how you ask it.
